AI in Literacy Education: Potentials and Pitfalls

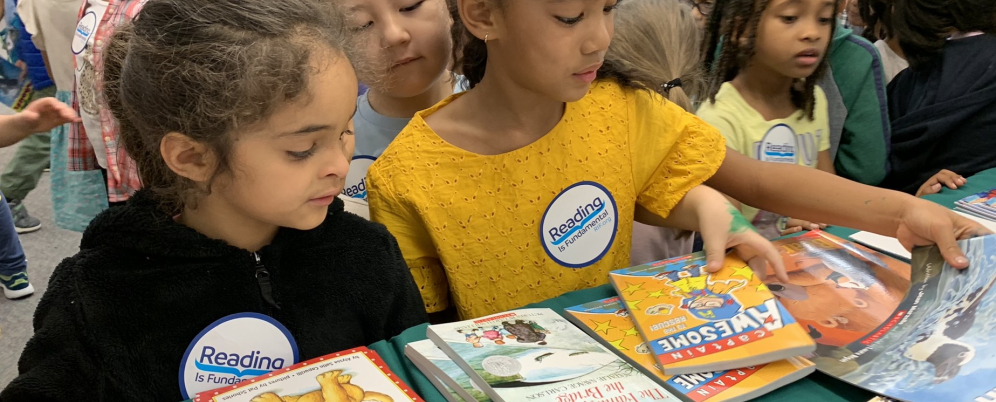

In an ever-changing educational landscape, Reading Is Fundamental (RIF) recognizes that adapting to new and improved technology is critical to ensuring that children can successfully read, learn, and grow. Technologies like Artificial Intelligence (AI) offer seamless access to knowledge and perspectives, but encouraging students to rely on them as tools can have consequences. Read on to hear from RIF's own Dr. Erin Bailey as she explores the potential benefits and pitfalls of this growing generative technology, as well as ways teachers can leverage it successfully.

Although my current work would suggest that I am a literacy person through and through, I started out during my undergraduate studies minoring in mathematics. On the first day of my introduction to computer programming class, the professor said, "Computers are dumb. Humans are smart. Humans tell computers what to do." That was many years ago, and I now wonder whether his statement holds true in the world of generative Artificial Intelligence (AI).

Today's generative AI, which includes Large Language Models (LLMs), can generate text, images, music, and even code based on the massive amounts of publicly available data, licensed data, and human input it has been trained on, and it has been described as the fastest-growing technology in history. According to key findings from a 2023 national survey, 51% of teachers reported using generative AI (such as ChatGPT), including 30% for lesson planning, 30% for coming up with ideas for classes, and 27% for building background knowledge for lessons and classes. Additionally, 33% of students ages 12-17 reported using ChatGPT for school. And two-thirds (65%) of students and three-quarters (76%) of teachers agree that integrating generative AI into schools will be important for the future.

So, what are some of the pitfalls and potentials that teachers and students should prepare for when using AI this upcoming school year as part of their literacy instruction?

1) Pitfall #1: Plagiarism - A top concern is that students will use AI to generate assignments or plagiarize the work of others.

- Potential: At the heart of this concern is an opportunity to teach students what plagiarism is and why it is wrong. Educators can hold a class discussion on academic integrity, followed by a contract or other form of commitment and guidelines on appropriate uses of AI for assignments. This is also an opportunity for educators to convey to students that learning is about the process, not the product.

2) Pitfall #2: Digital Dependence - Broadly, digital dependence is the concern that students will develop an overreliance on technology to the point that they are unable to complete assignments without it.

- Potential: Rather than focusing on the overuse of AI, reframe the issue around which types of lower-order thinking (e.g., summarizing and recall) AI can handle, and use this as an opportunity for students to devote more time to higher-order thinking (e.g., analysis, synthesis, and evaluation). For example, in writing, AI can be used to create an outline, spark ideas, or check grammar, allowing students to spend more time on the creative process of writing.

3) Pitfall #3: False Information - Generative AI produces text by predicting plausible-sounding language rather than by checking its claims against relevant, reliable sources and, therefore, may generate false or fabricated information.

- Potential: This requires explicit teaching about credibility, reliability, and points of view, all of which are skills students need to develop to become critical consumers of media. Additionally, working with students on prompt design helps them learn how to use AI appropriately and effectively and to think critically with technology. It demonstrates that the value of the output they receive from AI depends on the design of their input, and giving students opportunities to compare AI responses to different prompts makes this relationship concrete.

4) Pitfall #4: Linguistic Biases - Particularly for educators working with diverse student populations, such as multilingual learners, it is important to remember that text-generating AI tools are built on LLMs, which are trained to produce human-like text drawn largely from dominant forms of English and written expression and, therefore, are not neutral tools.

- Potential: Be critical, play with language, and make it your own. Remember that AI responds to its inputs, so the more students engage using linguistic expressions and syntax that feel authentic to them, the more AI's responses can reflect these ways of using language.

So, I'll return to my professor's statement, but reframe it as questions. Are computers dumb? Do humans still tell computers what to do? Even more importantly, as an educator, how can you empower your students to think about these questions as they engage with AI throughout their learning and reading journey this school year and beyond?

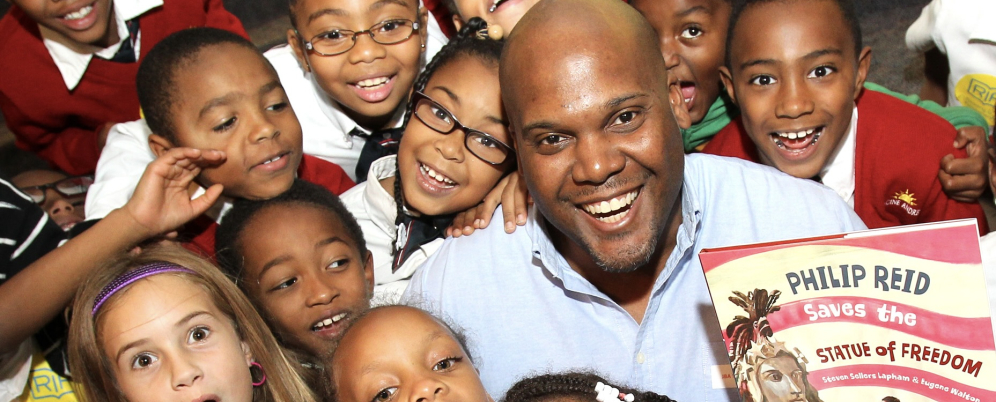

Dr. Erin Bailey is a former classroom teacher and literacy specialist. She currently serves as the Vice President of Literacy and Content at Reading Is Fundamental. Her research explores learning through informal spaces such as public gardens, art museums, and social movements.